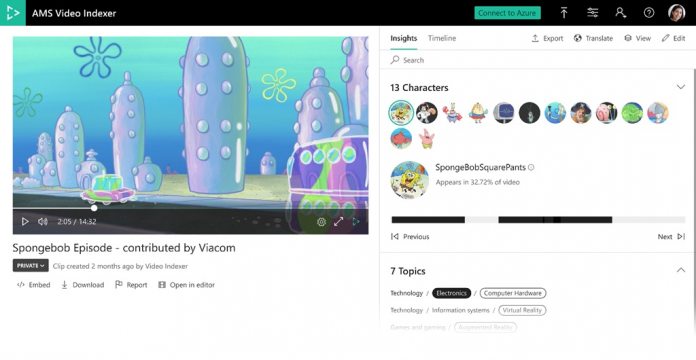

Video Indexer was launched at IBC a year ago and automatically creates metadata from user media. It does this by transcribing the video in any of the 10 languages supported in Microsoft Translator, which allows the indexer to add subtitles to the file. VI also uses facial recognition technology to detect the people in a video. If a person is sufficiently well known, the app assigns a name tag automatically. Otherwise, users can add the name themselves.

At IBC 2019 today, Microsoft celebrated VI’s anniversary with two new preview features. The first is animated character recognition. Microsoft explains that standard AI models do not handle animated faces well because they are typically designed for human faces. Azure Media Services Video Indexer now integrates with Azure Custom Vision to create AI models that automatically detect animated characters: “These models are integrated into a single pipeline, which allows anyone to use the service without any previous machine learning skills. The results are available through the no-code Video Indexer portal or the REST API for easy integration into your own applications.”

Multilingual Transcription

Microsoft has also brought multilingual detection and transcription to preview in Azure Media Services Video Indexer. Audio that mixes multiple languages is increasingly common, yet Microsoft says most speech-to-text solutions need the language to be specified before transcription. “Our new automatic spoken language identification for multiple content feature leverages machine learning technology to identify the different languages used in a media asset. Once detected, each language segment undergoes an automatic transcription process in the language identified, and all segments are integrated back together into one transcription file consisting of multiple languages.”
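
The flow Microsoft describes (identify the language of each segment, transcribe each segment in that language, then stitch the results into one file) can be pictured with a small Python sketch. This is only an illustration of the idea, not Video Indexer's implementation or API: the `Segment` type, the `transcribe_multilingual` and `render` functions, and the stub transcribers are all hypothetical names invented here, and language detection is assumed to have already happened.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Segment:
    """A span of audio whose spoken language has already been identified (hypothetical type)."""
    start: float      # seconds from the start of the media
    end: float
    language: str     # e.g. "en-US"
    text: str = ""    # filled in after transcription


def transcribe_multilingual(
    segments: List[Segment],
    transcribers: Dict[str, Callable[[Segment], str]],
) -> List[Segment]:
    """Transcribe each segment with the transcriber for its language,
    returning the segments in time order."""
    result = []
    for seg in sorted(segments, key=lambda s: s.start):
        transcribe = transcribers[seg.language]  # pick the per-language model
        result.append(Segment(seg.start, seg.end, seg.language, transcribe(seg)))
    return result


def render(segments: List[Segment]) -> str:
    """Join the transcribed segments into a single multi-language transcript."""
    return "\n".join(
        f"[{s.start:.1f}-{s.end:.1f}] ({s.language}) {s.text}" for s in segments
    )


if __name__ == "__main__":
    # Stub transcribers stand in for real speech-to-text models (assumption).
    stubs = {
        "en-US": lambda seg: "hello",
        "fr-FR": lambda seg: "bonjour",
    }
    detected = [Segment(4.2, 9.0, "fr-FR"), Segment(0.0, 4.2, "en-US")]
    print(render(transcribe_multilingual(detected, stubs)))
```

The point of the sketch is the ordering of the steps: because each segment carries its own language tag, no single language has to be chosen for the whole file up front.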