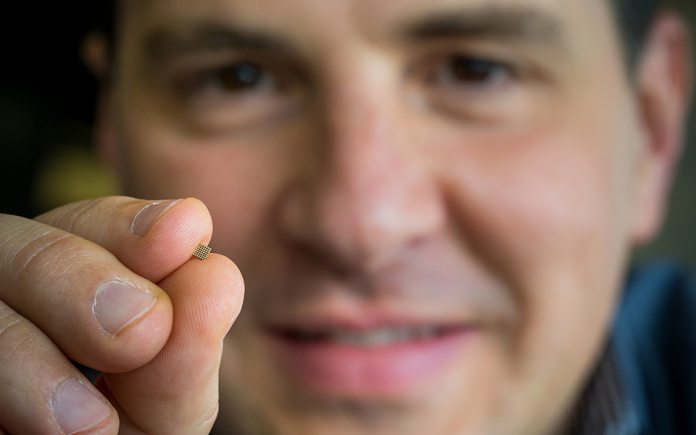

Microsoft Research has described a project called the Embedded Learning Library (ELL), which aims to embed AI onto a chip the size of a breadcrumb. With this technology, developers would be able to build machine learning into embedded platforms. For example, a Raspberry Pi would be able to run artificial intelligence, making machine learning more accessible.

"The dominant paradigm is that these devices are dumb," said Manik Varma, a senior researcher with Microsoft Research India and a co-leader of the project. "They sense their environment and transmit their sensor readings to the cloud where all of the machine learning happens. Unfortunately, this paradigm does not address a number of critical scenarios that we think can transform the world."

The company says the AI can run without an internet connection. Running locally removes bandwidth constraints and network latency. Keeping the AI on the embedded device also improves privacy and limits battery drain. In its announcement, Microsoft Research says the possibilities are many: all sorts of devices could be built using this small-processor approach.

Democratizing AI

The researchers imagine all sorts of intelligent devices that could be created with this method, from smart soil-moisture sensors deployed for precision irrigation on remote farms to brain implants that warn users of impending seizures so that they can get to a safe place and call a caregiver.

"Giving these powerful machine-learning tools to everyday people is the democratization of AI," said Saleema Amershi, a human-computer-interaction researcher in the Redmond lab. "If we have the technology to put the smarts on the tiny devices, but the only people who can use it are the machine-learning experts, then where have we gotten?"

The Embedded Learning Library's APIs can be used from either C++ or Python. You can download the early preview from GitHub.